Yunfei Guo

Ph.D. in Computer Engineering from Virginia Tech, specializing in Robotics.

Location: Blacksburg, Virginia

Email: yunfei96@vt.edu

Bio

I earned my Ph.D. through research conducted in the Robotics and Mechatronics Lab at Virginia Tech under the guidance of Prof. Pinhas Ben-Tzvi. My work spans Mechatronics Design, Robotic System Integration, Software Development, and Machine Learning, including Reinforcement Learning. My research focuses on the system integration of medical robotic exoskeleton gloves. I have extensive experience designing applied control systems for exoskeletons. This includes electronics design, PCB fabrication, and the development of parallel control programs, data-driven control algorithms, and high-level motion planning based on reinforcement learning. I have also developed vision- and voice-based Human-Machine Interfaces (HMIs) for exoskeletons using deep learning techniques.

Research Summary

Development of an Assistive Robotic Exoskeleton Glove for Patients with Brachial Plexus Injuries

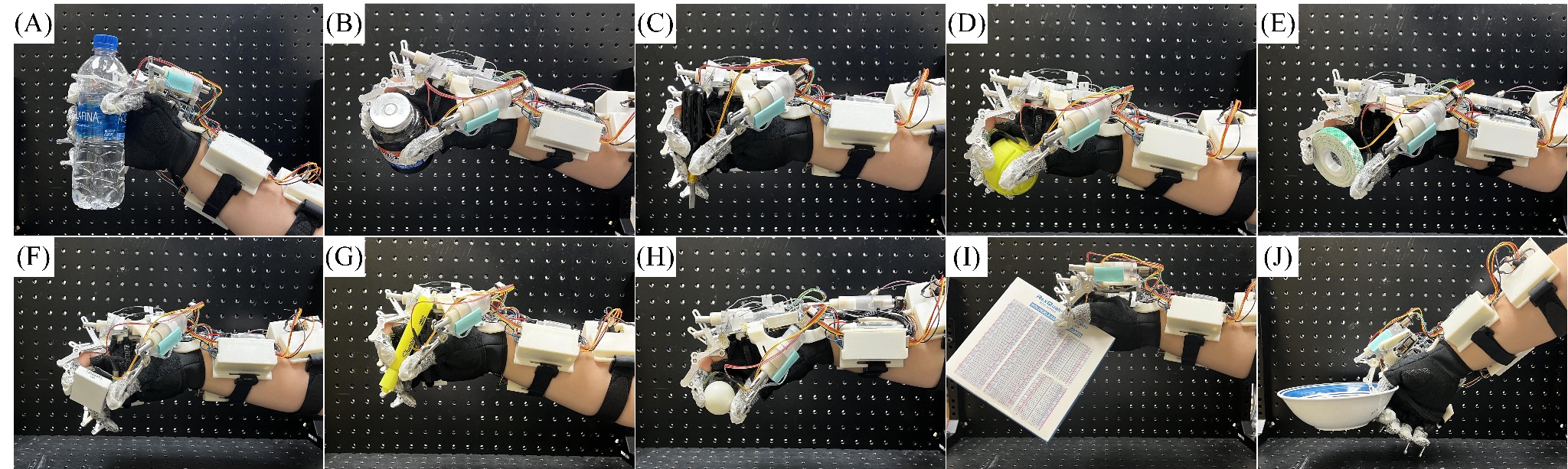

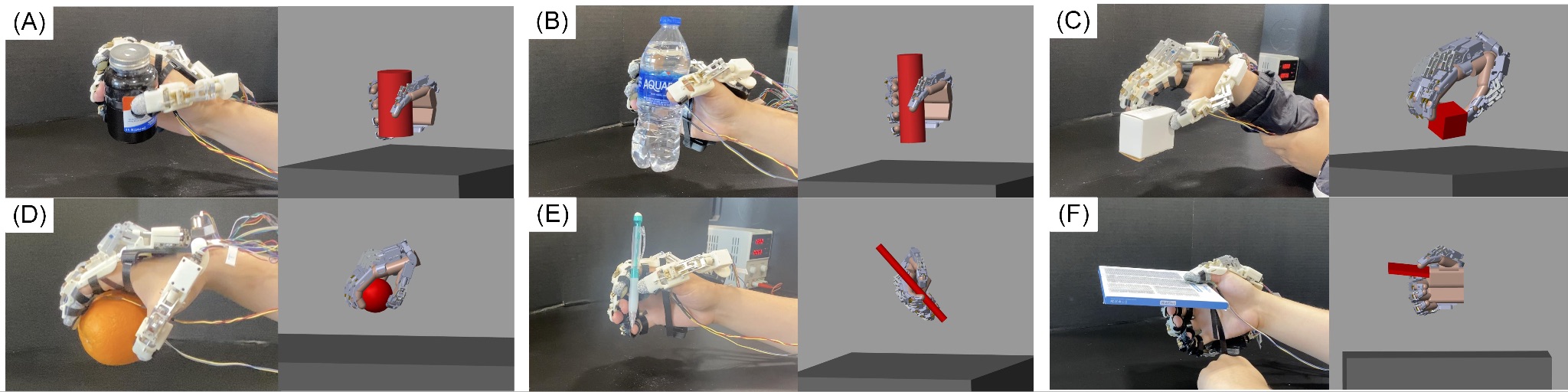

Brachial Plexus Injury (BPI) is a nerve injury, usually caused by motorcycle accidents, that results in hand disability. In patients with BPI, nerve signals to the hand are cut off, leaving them unable to control the hand muscles. An exoskeleton glove serves as a physical therapy device that helps patients with BPI avoid muscle atrophy. My team and I designed two exoskeleton glove prototypes to assist patients with BPI (shown in Fig. 1 and Fig. 2). The initial prototype was designed and used in clinical experiments. The final prototype was designed based on patient feedback collected during those experiments. We also developed multiple control algorithms and Human-Machine Interfaces for the glove. For more details, please visit my Google Scholar.

Figure 1: The initial exoskeleton glove prototype, featuring side-mounted linkages and Series Elastic Actuators (SEAs)

Figure 2: The final exoskeleton glove prototype, featuring top-mounted linkages. Its control method was trained in a MATLAB Simscape simulation environment using a deep reinforcement learning algorithm

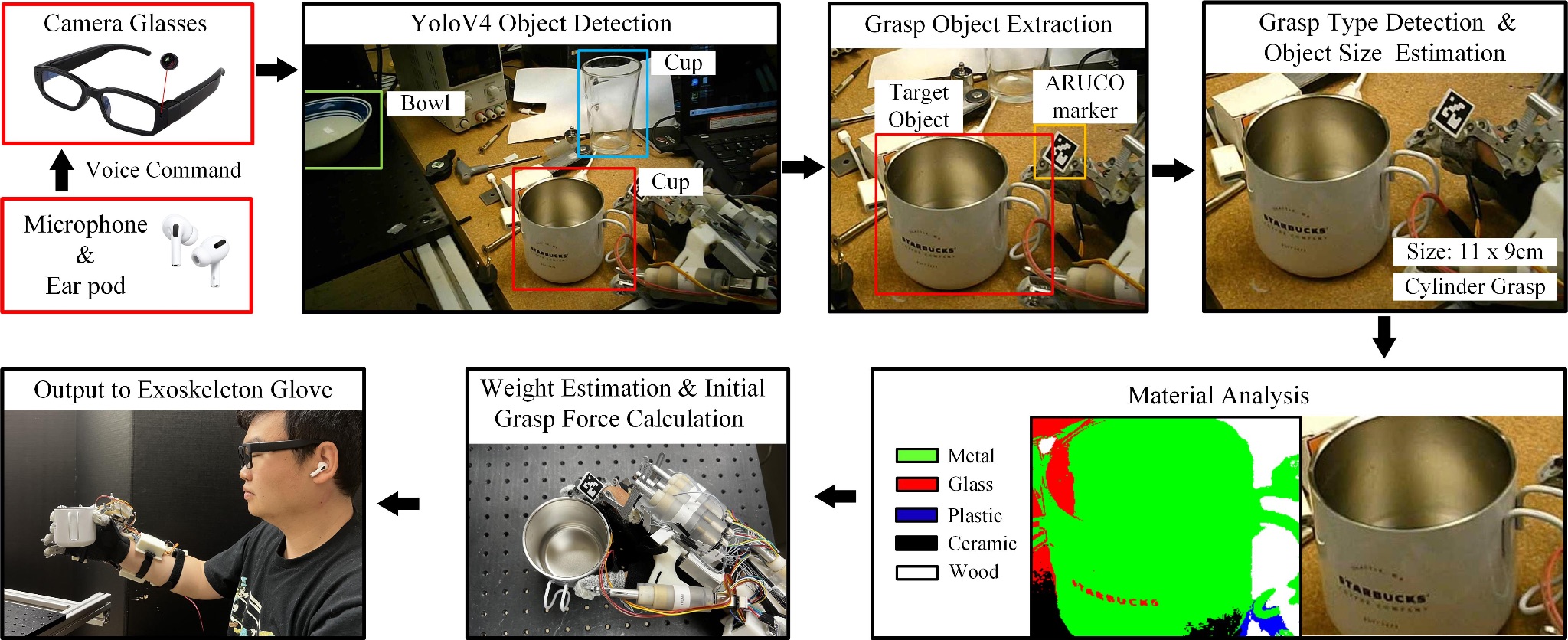

Vision-based Human-Machine Interface Designed to Assist Force Planning for a Robotic Exoskeleton Glove

This research proposed a computer vision-based algorithm that assists force planning for the exoskeleton glove by estimating the size, surface material, and weight of the target object. Traditional force planning requires the size, surface material, and weight of the grasped object to calculate the grasp force. However, this information cannot be gathered without explicitly measuring the target object. To address this, the proposed method performs object detection and surface material detection to estimate the object's size, surface material, and weight. The system overview is shown in Fig. 3.

Figure 3: System overview of the vision-based force planning method
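To illustrate why the estimated weight and surface material both matter for force planning, here is a minimal Python sketch. It assumes a simple Coulomb friction model in which n fingertip contacts share the object's weight. It is not the method used in this research. The function name `min_grasp_force`, the two-contact default, and the 1.5 safety factor are hypothetical. In a vision-based pipeline, `mass_kg` would come from the estimated weight, and `mu` would be a friction coefficient associated with the detected surface material.

```python
# Minimal sketch (hypothetical, not the research's actual planner):
# per-contact grip force under a simple Coulomb friction model.
G = 9.81  # gravitational acceleration, m/s^2


def min_grasp_force(mass_kg: float, mu: float, n_contacts: int = 2,
                    safety_factor: float = 1.5) -> float:
    """Return the normal force (N) each contact must apply to hold an object.

    Each contact can supply at most mu * F_n of friction, so holding the
    object requires n_contacts * mu * F_n >= m * g, i.e.
    F_n >= m * g / (n_contacts * mu). The safety factor is an assumed margin.
    """
    if mass_kg < 0 or mu <= 0 or n_contacts < 1 or safety_factor < 1:
        raise ValueError("invalid grasp parameters")
    return safety_factor * mass_kg * G / (n_contacts * mu)


if __name__ == "__main__":
    # Example: 0.5 kg object, friction coefficient 0.5, two contacts.
    print(round(min_grasp_force(0.5, 0.5), 2))
```

Under these assumptions, a 0.5 kg object with μ = 0.5 needs about 7.36 N per contact. A more slippery material (lower μ) raises the required force. This is why the material estimate matters as much as the weight estimate.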

Voice-based Human-Machine Interface for a Robotic Exoskeleton Glove

This research proposed a voice-based Human-Machine Interface (HMI) for interacting with an assistive exoskeleton glove designed for patients with BPI. The key feature of this HMI is its ability to perform speaker verification. A demonstration of the HMI is shown in the video below.

Voice-based HMI with speaker verification feature
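The description above does not say how speaker verification is implemented. One common approach works like this: compute a fixed-length speaker embedding for the incoming utterance, then compare it against an embedding recorded when the patient enrolled. The command is accepted only if the cosine similarity of the two embeddings meets a threshold. The Python sketch below shows only that decision step, under that assumption. The function names, example vectors, and 0.7 threshold are hypothetical. Producing the embeddings (typically with a deep neural network) is out of scope.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embedding must be non-zero")
    return dot / (norm_a * norm_b)


def verify_speaker(enrolled: Sequence[float], probe: Sequence[float],
                   threshold: float = 0.7) -> bool:
    """Accept a voice command only if the probe matches the enrolled user.

    The threshold is a hypothetical value; in practice it would be tuned
    to trade off false accepts against false rejects.
    """
    return cosine_similarity(enrolled, probe) >= threshold


if __name__ == "__main__":
    enrolled_user = [1.0, 0.0, 0.0]   # hypothetical enrolled embedding
    same_speaker = [0.9, 0.1, 0.0]    # hypothetical probe from the user
    other_speaker = [0.0, 1.0, 0.0]   # hypothetical probe from someone else
    print(verify_speaker(enrolled_user, same_speaker))
    print(verify_speaker(enrolled_user, other_speaker))
```

Gating commands on verification means that a bystander's speech should not trigger glove motions. Raising the threshold makes that less likely, at the cost of occasionally rejecting the patient.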